Artifact: SHADERS!!!

I must profess something. I am in love. I’ve known about shaders and worked a little bit with them before, but it wasn’t until I sat down and actually revisited the area that I remembered the beauty that is shaders.

For those of you who do not know, shaders are small programs that run on the graphics card. In video games they are commonly used for real-time post-processing effects (there are other uses, but that’s the one we care about here). Since we’re working with SFML, which uses OpenGL, our shaders must be written in GLSL (OpenGL Shading Language). The syntax is simple and C-like, so it’s not hard to write, but there is a learning curve, since you need to know certain built-in functionality beforehand. I will not bore you with the details, but it is a very powerful tool to make your game *POP*.

There are several types of GLSL shaders, among them fragment, vertex, tessellation and geometry shaders. Since we’re making a 2D game, I’m only looking at fragment shaders at the moment, seeing as all planned shaders operate on the pixels on screen.

So, let’s talk about what shaders we’re using, what we’re planning to use and, most importantly, why we want them.

Over the last couple of weeks we’ve talked a lot about feedback, and how we want as much as possible to be conveyed to the player through graphical feedback rather than explicit information. That’s where shaders come in.

Gray-scale shader:

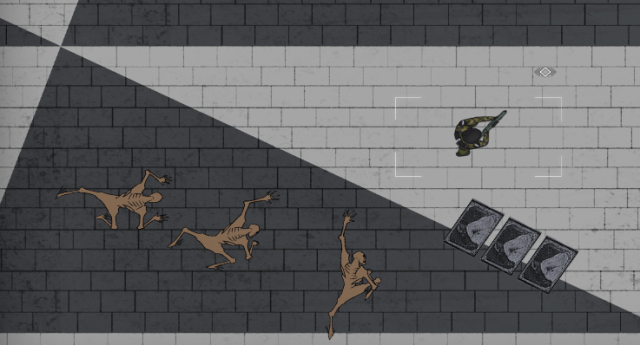

We wanted to convey the player’s current health level through several coordinated effects. One of these effects is to decrease saturation of the screen based on how low on health the player is.
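As a rough sketch of the C++ side of this idea, the health value can be turned into a saturation factor that is then handed to the shader. The function name `saturationFromHealth` and the linear mapping are assumptions made for illustration, not our actual game code.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical helper: maps the player's health to a saturation factor.
// 1.0 means full color, 0.0 means fully gray. The linear mapping is an
// assumption; any curve that ends up in [0, 1] would work.
float saturationFromHealth(float health, float maxHealth) {
    assert(maxHealth > 0.0f);
    const float ratio = health / maxHealth;
    return std::max(0.0f, std::min(1.0f, ratio));
}
```

With SFML 2.4 or later, the result could be passed on with something like `shader.setUniform("saturation", saturationFromHealth(hp, maxHp));`, where the uniform name `saturation` is again just a placeholder.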

The current implementation also allows for modification of brightness and contrast, should that ever be desired.

The implementation is fairly simple. Brightness simply multiplies the color value per pixel by a brightness factor. For gray-scale you simply take the red, green and blue values per pixel and set them to their average. Trying to fix contrast, on the other hand, proved a bit more difficult. The concept itself is easy: you subtract the color gray from your current color value, multiply the result by your contrast factor and then add the gray color value again. The problem I stumbled upon was not knowing what values to use. Knowing that some color scales use integers in the interval 0-255 to represent color, while others use hexadecimal values and some use a floating point value between 0 and 1, I had to do some guesswork, since the information seemed to be unobtainable despite my numerous, creatively constructed Google searches. It turns out that it varies. IT VARIES! WHY DOES IT VARY!? In this particular instance, it turned out to be a floating point value between 0 and 1 (which puts gray at 0.5), and so the problem is now solved!
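To make the arithmetic concrete, here is a minimal sketch of the three operations, written as plain C++ on a single pixel rather than as GLSL. The `Color` struct and the function names are made up for this example, and the clamping is an assumption on my part; in the real shader the same math would run once per fragment.

```cpp
#include <algorithm>
#include <cassert>

// One pixel with components normalized to [0, 1], like a GLSL color.
struct Color { float r, g, b; };

// Keeps a channel inside the valid [0, 1] range.
float clamp01(float v) { return std::max(0.0f, std::min(1.0f, v)); }

// Brightness: multiply every channel by the same factor.
Color applyBrightness(Color c, float factor) {
    assert(factor >= 0.0f);
    return { clamp01(c.r * factor), clamp01(c.g * factor), clamp01(c.b * factor) };
}

// Gray-scale: blend each channel toward the average of the three.
// saturation = 1 keeps the color, saturation = 0 gives full gray.
Color applySaturation(Color c, float saturation) {
    const float gray = (c.r + c.g + c.b) / 3.0f;
    return { gray + (c.r - gray) * saturation,
             gray + (c.g - gray) * saturation,
             gray + (c.b - gray) * saturation };
}

// Contrast: subtract mid-gray (0.5), scale, then add mid-gray back.
Color applyContrast(Color c, float factor) {
    const float mid = 0.5f;
    return { clamp01((c.r - mid) * factor + mid),
             clamp01((c.g - mid) * factor + mid),
             clamp01((c.b - mid) * factor + mid) };
}
```

For example, `applyContrast({0.75f, 0.25f, 0.5f}, 2.0f)` pushes the first two channels out to 1.0 and 0.0 while leaving the mid-gray channel at 0.5, which is exactly the "stretch away from gray" behavior described above.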

“Glitch” shader:

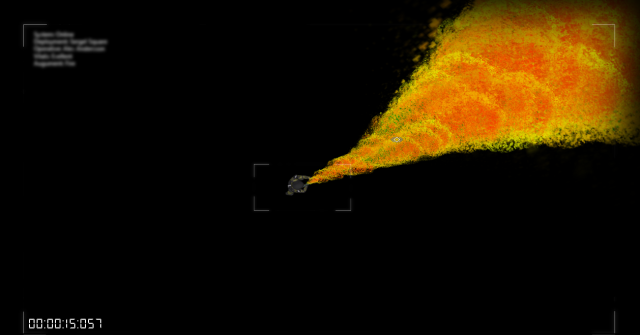

Since the game is presented as if seen from a satellite, we wanted taking damage to temporarily “damage the up-link” and make the screen jitter as a result. Combined with a buzz effect made with a simple random noise filter, this should feel authentic. This shader is not currently a high priority, since we have other things we need to complete first, but I would really love to get to do it.
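Since this shader isn’t written yet, the following is only one possible way to approach it, sketched in C++ instead of GLSL. It assumes a sine-based pseudo-random function of the kind often seen in fragment shaders, shifts each row of the screen sideways by a random amount, and blends some static into the color. All names and constants here are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Cheap pseudo-random value in [0, 1] derived from two inputs.
// It is deterministic: the same inputs always give the same output.
float pseudoRandom(float x, float y) {
    const float s = std::sin(x * 12.9898f + y * 78.233f) * 43758.5453f;
    return s - std::floor(s);  // keep only the fractional part
}

// Jitter: shifts the horizontal texture coordinate u of a pixel by an
// amount that depends on its row v and the current time, so every
// row slides sideways by at most `strength`.
float jitterU(float u, float v, float time, float strength) {
    assert(strength >= 0.0f);
    const float offset = (pseudoRandom(v, time) * 2.0f - 1.0f) * strength;
    return u + offset;
}

// Buzz: blends one color channel toward a noise value.
// amount = 0 leaves the channel untouched, amount = 1 is pure static.
float addStatic(float channel, float u, float v, float time, float amount) {
    const float n = pseudoRandom(u + time, v - time);
    return channel + (n - channel) * amount;
}
```

Raising `strength` and `amount` for a fraction of a second when the player is hit, then easing them back to zero, would give the temporary “damaged up-link” feel.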

Light shader:

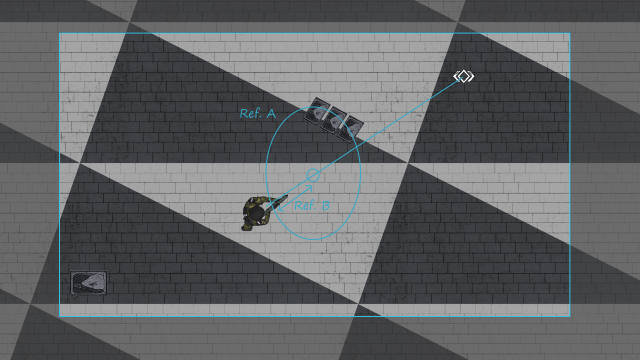

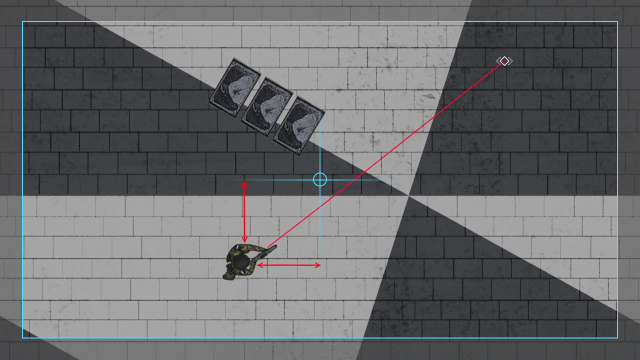

We have scrapped this feature, but originally we intended to use dynamic lights in the game. Calculating the shape of the light would of course be done in C++, but applying the light to the environment is best done with shaders. Again, it’s not an incredibly difficult thing to achieve, and there are a lot of fun things that can be done with it, such as simulating 3D surfaces with normal maps. I might end up adding some simple lighting if time allows, just to make it look extra good, but playability has priority.
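For the curious, the normal map trick boils down to very little math. The sketch below is not code from our project; it assumes the usual convention of storing a normal in the red, green and blue channels of a texture, and uses basic diffuse (Lambert) lighting. `Vec3`, `decodeNormal` and `diffuse` are names invented for this example.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

// Scales a vector to length 1.
Vec3 normalize(Vec3 v) {
    const float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    assert(len > 0.0f);
    return { v.x / len, v.y / len, v.z / len };
}

// A normal map stores each component in [0, 1];
// this maps it back to a direction with components in [-1, 1].
Vec3 decodeNormal(float r, float g, float b) {
    return normalize({ r * 2.0f - 1.0f, g * 2.0f - 1.0f, b * 2.0f - 1.0f });
}

// Diffuse light intensity in [0, 1]: the more the surface faces
// the light, the brighter it gets. Surfaces facing away get 0.
float diffuse(Vec3 normal, Vec3 toLight) {
    const Vec3 n = normalize(normal);
    const Vec3 l = normalize(toLight);
    return std::max(0.0f, n.x * l.x + n.y * l.y + n.z * l.z);
}
```

Multiplying a pixel’s color by `diffuse(...)` (and by the light’s color) is what makes a flat sprite look like it has bumps and edges as the light moves around.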